
How Professionals Quietly Evaluate Financial Modeling Prep Before Recommending It Internally

Jan 27, 2026

Recommending a new data vendor to your organization is a transfer of risk. You are essentially taking the vendor's technical debt and placing it on your own professional reputation. If the API breaks during a critical reporting window, or if the data coverage turns out to be spotty, the blame does not fall on the external sales team. It falls on the internal champion who vouched for the tool.

This dynamic creates a distinct, unwritten phase in the procurement cycle: the "shadow pilot." Before a manager is alerted or a procurement ticket is raised, a senior analyst or developer will often spend a week quietly testing the infrastructure. They do this on their own time to determine if the tool is robust enough to deserve political capital.

The quiet evaluation is as much about protecting personal credibility as it is about giving yourself permission to explore without pressure.

The "Desk Check" Using Core Reference Data

The first step in a quiet evaluation is establishing a baseline of trust using data you already know by heart. You do not start with complex, derivative metrics. You start with the fundamental identity of the companies you cover. If a vendor cannot get the static reference data right, it certainly cannot be trusted to feed dynamic pricing models.

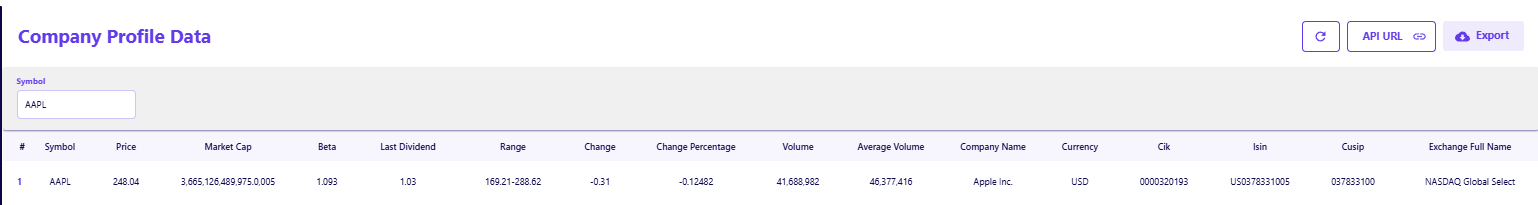

A common approach is to pull the profile of a widely held asset, such as Apple (AAPL), and audit the specific identifiers that often break downstream systems. The FMP Company Profile API allows you to instantly verify fields like the CIK (Central Index Key) or ISIN (International Securities Identification Number).

In many legacy systems, a mismatch in CIK mapping can cause a total failure in automated filing retrieval. By verifying that Financial Modeling Prep correctly maps Apple's CIK to 0000320193 and its ISIN to US0378331005, you are not just checking for typos. You are verifying that the vendor's identifiers line up with the authoritative sources: the CIK assigned by the SEC and the ISIN defined under ISO 6166.
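The identifier audit described above can be scripted in a few lines. The sketch below is only an illustration: it assumes you already have the profile payload as a dictionary with `cik` and `isin` keys (confirm the field names against a live response), and the helper names `normalize_cik`, `isin_checksum_ok`, and `audit_profile` are hypothetical. It pads the CIK to the 10-digit form used by SEC EDGAR and checks the ISIN's check digit before comparing both against the values you already trust.

```python
# Values taken from the article; treat them as your own trusted reference copy.
EXPECTED_AAPL = {"cik": "0000320193", "isin": "US0378331005"}


def normalize_cik(cik):
    """Zero-pad a CIK to 10 digits so '320193' and '0000320193' compare equal."""
    return str(cik).strip().zfill(10)


def isin_checksum_ok(isin):
    """Validate an ISIN check digit (Luhn over the letter-expanded string)."""
    isin = str(isin).strip().upper()
    if len(isin) != 12 or not isin.isascii() or not isin.isalnum():
        return False
    if not isin[-1].isdigit():
        return False
    # Letters expand to two digits (A=10 ... Z=35); digits stay as they are.
    digits = "".join(str(int(ch, 36)) for ch in isin)
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def audit_profile(profile, expected):
    """Return {field: (vendor_value, expected_value)} for every mismatch."""
    problems = {}
    got_cik = normalize_cik(profile.get("cik", ""))
    if got_cik != normalize_cik(expected["cik"]):
        problems["cik"] = (got_cik, expected["cik"])
    got_isin = str(profile.get("isin", "")).strip().upper()
    if got_isin != expected["isin"] or not isin_checksum_ok(got_isin):
        problems["isin"] = (got_isin, expected["isin"])
    return problems
```

An empty result from `audit_profile(payload, EXPECTED_AAPL)` means both identifiers agree with your reference values. Normalizing the CIK first avoids false alarms when a vendor returns it without leading zeros.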

Why Identifiers Matter

- System Interoperability: Incorrect ISINs break joins across SQL tables.

- Regulatory Compliance: Mismatched CIKs lead to pulling the wrong 10-K filings.

- Vendor Taxonomy: Standardized tags indicate a mature data infrastructure.

Validating Macro Economic Consistency

Once company-level data is verified, the next logical step in a private audit is to test the broader economic environment. Macro data is often where "scraping" errors become most visible. A responsible evaluator will cross-reference a vendor's economic outputs against primary government sources to check for lag or rounding errors.

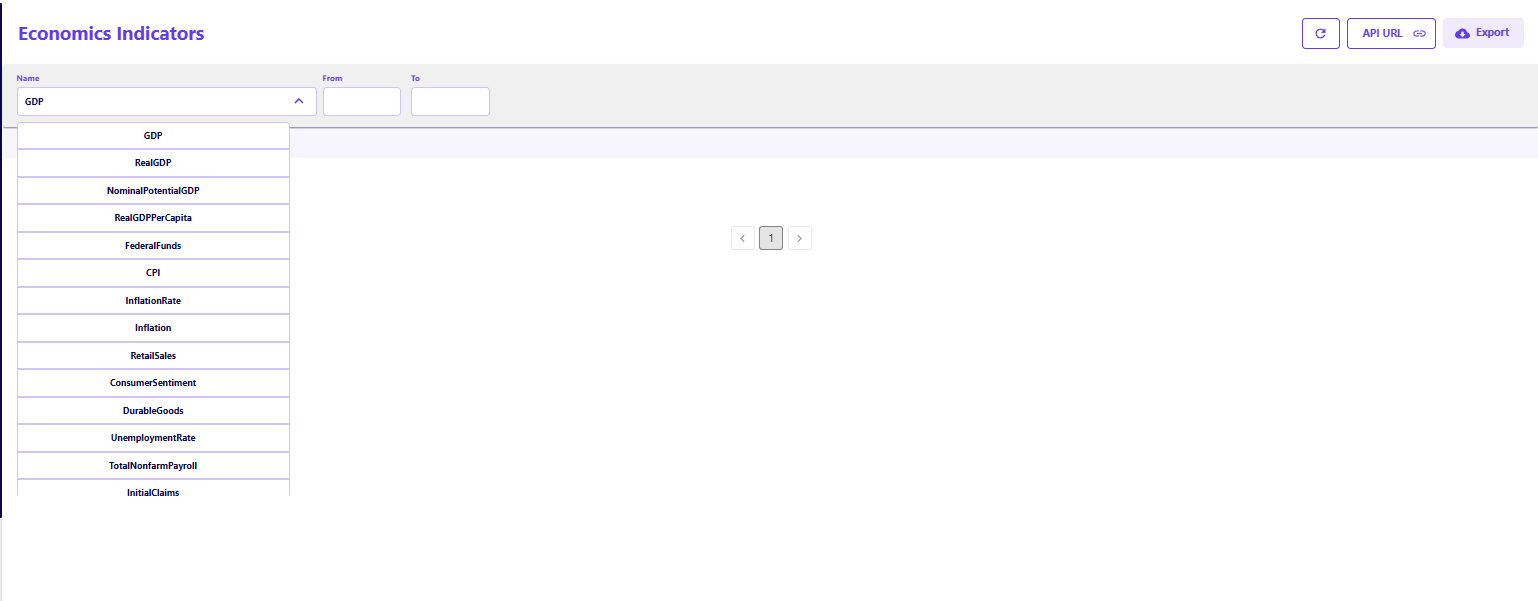

Using the FMP Economics API, an analyst can retrieve high-level indicators like Real GDP, CPI (Consumer Price Index), or the Federal Funds Rate. The test here is one of synchronization. When the Bureau of Economic Analysis releases a GDP revision, does it appear in the API payload within a reasonable window?

For example, checking the "InflationRate" or "UnemploymentRate" endpoints allows you to see if the data reflects the most recent print. If the API is serving stale economic figures while claiming to be real-time, it suggests a lack of maintenance on the backend pipelines. Verifying this alignment requires no code, just a simple query compared against the official release from the agency that publishes the figure, such as the Bureau of Labor Statistics for CPI and unemployment.
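The comparison needs no code when done once, but an analyst who wants to repeat it after each release could script it. The sketch below is a minimal illustration under stated assumptions: both the vendor series and the official series are lists of rows with `date` (ISO format) and `value` keys, and the function names and the tolerance are hypothetical choices, not part of any FMP interface. It reports how many days the vendor's latest observation trails the official one and whether the values agree for the official release date.

```python
from datetime import date


def latest(rows):
    """Return (date, value) for the most recent observation in rows."""
    row = max(rows, key=lambda r: date.fromisoformat(r["date"][:10]))
    return date.fromisoformat(row["date"][:10]), float(row["value"])


def compare_latest(vendor_rows, official_rows, tolerance=0.05):
    """Compare the vendor's series against the official one at the latest print."""
    vendor_date, _ = latest(vendor_rows)
    official_date, official_value = latest(official_rows)

    # Look up the vendor's value for the same period as the official print.
    vendor_by_date = {r["date"][:10]: float(r["value"]) for r in vendor_rows}
    matched = vendor_by_date.get(official_date.isoformat())
    diff = None if matched is None else abs(matched - official_value)

    return {
        "lag_days": (official_date - vendor_date).days,
        "is_stale": vendor_date < official_date,
        "value_diff": diff,
        "within_tolerance": diff is not None and diff <= tolerance,
    }
```

A positive `lag_days` shortly after a release is not alarming on its own; a lag that persists across several checks is the signal worth noting. The tolerance should reflect the rounding convention of the indicator you are checking.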

Verify the accuracy of your potential data source by comparing a known economic indicator against a government release today.

The "Monday Morning" Stability Test

Reliability is not just about data accuracy; it is about availability. A tool that works perfectly on a Saturday afternoon but times out during the market open is useless in a professional environment. The "Monday Morning" test is a crucial part of the quiet evaluation.

This involves running a set of standard queries during peak usage hours, typically right at the market open on Monday or Friday. You are looking for latency spikes or 5xx server errors. Testing at peak approximates the operational conditions the API would face if integrated into your firm's production environment.

What to Monitor

- Response Time: Does latency degrade when volume increases?

- Timeout Rate: Do queries hang indefinitely or fail fast?

- Consistency: Does the payload structure remain identical under load?
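The three signals above can be collected with a small probe. The sketch below is one possible shape for it, not a prescribed method: `probe` and `summarize` are hypothetical helper names, the URL is whatever query you already run by hand, and the 5-second timeout is an arbitrary assumption. It uses only the Python standard library.

```python
import json
import time
import urllib.error
import urllib.request
from statistics import median


def probe(url, timeout=5.0):
    """Issue one GET and record latency, HTTP status, and top-level field names."""
    start = time.perf_counter()
    status, fields = None, None
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
            body = json.loads(resp.read())
            first = body[0] if isinstance(body, list) and body else body
            fields = frozenset(first) if isinstance(first, dict) else frozenset()
    except urllib.error.HTTPError as exc:
        status = exc.code  # 4xx/5xx responses are raised as exceptions
    except (OSError, ValueError):
        pass  # no response (timeout, connection failure) or unparseable body
    return {"latency_s": time.perf_counter() - start, "status": status, "fields": fields}


def summarize(samples):
    """Reduce a list of probe results to the three signals listed above."""
    n = len(samples)
    latencies = sorted(s["latency_s"] for s in samples)
    schemas = {s["fields"] for s in samples if s["fields"] is not None}
    return {
        "median_latency_s": median(latencies),
        # Rough 95th percentile: index into the sorted latencies.
        "p95_latency_s": latencies[min(n - 1, int(0.95 * n))],
        # Share of requests that produced no HTTP response at all.
        "timeout_rate": sum(s["status"] is None for s in samples) / n,
        "server_error_rate": sum(
            s["status"] is not None and s["status"] >= 500 for s in samples
        ) / n,
        # True when every parsed payload exposed the same set of field names.
        "schema_consistent": len(schemas) <= 1,
    }
```

Running `summarize([probe(url) for _ in range(20)])` once at the market open and once on a weekend gives two summaries to compare side by side. Keep the request count modest so the probe stays within your plan's rate limits.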

Transitioning from Exploration to Advocacy

The goal of the quiet evaluation is to reach a state of high conviction. You are looking for the moment when the risk of recommending the tool drops below the value it provides. This usually happens when you have successfully replicated a specific internal workflow—like a valuation model or a risk dashboard—using the new data source.

When you finally speak to your manager or team lead, you are not saying, "I think we should look at this tool." You are saying, "I have already validated that this tool solves our specific data gap, matches our internal identifiers, and handles peak load." This shift in framing changes the conversation from a speculative research project to a concrete implementation plan.

The Strategic Value of Private Validation

The quiet evaluation is a hallmark of professional diligence. It respects the limited bandwidth of your organization by filtering out noise before it becomes a distraction. By rigorously testing Financial Modeling Prep against your own standards—checking identifier mappings, verifying economic indicators, and testing stability—you ensure that your recommendation carries weight. You do the hard work in private so that the public implementation is seamless.

Frequently Asked Questions

Why do professionals evaluate tools privately first?

Private evaluation protects your professional reputation. It ensures you only recommend tools that you have personally validated, preventing you from vouching for software that might later fail or cause integration headaches.

What is the most effective way to test data accuracy quickly?

Compare "static" reference data for a major company against your internal records. If fields like CIK, ISIN, or sector classification match perfectly, it indicates the vendor uses standard, reliable taxonomies.

How can I test API stability without technical support?

Run your queries during market open or other high-traffic times. Consistent response times during these windows are a better indicator of infrastructure health than off-hours testing.

What specific data points should I check to verify macro data?

Compare high-visibility indicators like GDP, the Federal Funds Rate, or CPI against the official releases from government websites. Discrepancies here are immediate red flags for data quality.

When is the right time to bring the tool to my team?

You should present the tool only after you have confirmed it solves a specific problem and integrates cleanly with your current workflow. The recommendation should be based on proof, not just potential.

Does a quiet evaluation require a paid subscription?

Often, no. The validation of core data structures and response formats can usually be done using free or low-tier access, allowing you to assess quality without a budget request.

What is the biggest risk of skipping the private evaluation?

The biggest risk is reputational damage. If you champion a tool that subsequently breaks a production workflow, your judgment regarding future infrastructure decisions will be questioned.
