FMP

What a Responsible “Test Drive” of Financial Modeling Prep Looks Like

Jan 27, 2026

The traditional data procurement cycle is broken. It usually involves three discovery calls, a signed NDA, and a two-week wait just to see a sample dataset that might not even fit your schema. This friction forces analysts to make decisions based on sales decks rather than technical reality.

A responsible evaluation flips this dynamic. It prioritizes autonomy and direct verification over vendor promises. The goal is to determine utility before you ever speak to a sales representative.

For a platform like Financial Modeling Prep, the most effective test drive is silent, small-scale, and ruthlessly focused on failure points. It allows you to validate the infrastructure on your own terms, protecting your time and political capital.

The Case for Autonomous Evaluation

Waiting for permission to test a data source is a strategic error. By the time a formal pilot is approved, you could have already determined if the data is viable. Autonomous evaluation shifts the risk curve downward because it requires no organizational buy-in to start.

Shifting the Risk Curve

A "silent" test drive creates leverage. You are not asking your manager for budget; you are presenting a validated solution that is already running in a staging environment. This approach favors tools that offer instant API key provisioning and transparent documentation over those hiding behind gated access.

Gaining Political Leverage

When you evaluate independently, you control the narrative. You avoid the pressure of a vendor-managed "success criteria" document. Instead, you only recommend solutions you have personally broken and fixed. This ensures you test the actual API capabilities, not a curated "sandbox" environment designed to look perfect.

Defining the Scope of the Test

A common mistake is trying to ingest the entire universe of data on day one. This leads to analysis paralysis. A responsible test drive focuses on a narrow, representative slice of the market that acts as a proxy for the whole.

Instead of indiscriminately pulling feeds, start by understanding stock market data sets to distinguish between what requires real-time precision and what can be handled with historical baselines.

The Control Group Strategy

You should select a "control group" of assets tickers or indices where you already possess clean, trusted data. If the new source matches your control group, you have established a baseline of trust.

- The Blue Chips: Pick 5-10 major equities to verify standard data flow.

- The Exotics: Pick an asset class you struggle to source, such as commodities or forex pairs, to test breadth.

Targeting the Edge Cases

Commoditized data is easy to get right; edge cases are where infrastructure breaks. Select 2-3 companies with recent splits, mergers, or complex ticker changes. If the API handles these correctly, the rest of the dataset is likely stable.

Case Study: The 15-Minute Infrastructure Audit

To demonstrate this methodology, we conducted a rapid audit of Financial Modeling Prep using a standard "Control Group" approach. The goal was not to build a model, but to verify three structural pillars: Identity, Economics, and Continuity.

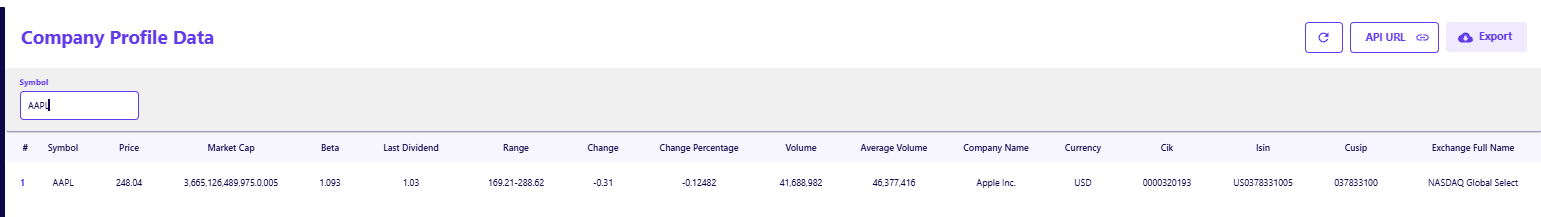

Step 1: Verifying Identity and Taxonomy

We began by querying the Company Profile endpoint for a major liquid asset (Apple Inc.). The objective was to confirm that the vendor provides robust, standardized identifiers necessary for joining tables in a SQL environment.

As seen in the API output below, the response includes critical mapping fields:

- CIK (0000320193): Essential for linking price data to SEC filings.

- ISIN (US0378331005): Required for global compliance and cross-border portfolio management.

- Currency (USD): Explicitly stated, preventing dangerous assumptions in multi-currency models.

This level of detail confirms that the dataset is built for data-driven enterprises that require precise entity resolution, not just retail display.

Step 2: The Macro Consistency Check

Next, we tested the breadth of the Economic Indicator endpoints. A responsible test drive must ensure that macro data is accessible programmatically, rather than requiring manual downloads from government sites.

The endpoint successfully enumerated key indicators including Real GDP, Federal Funds Rate, and CPI. The ability to pull these indicators via the same API token used for equity pricing reduces the need for multiple vendor contracts.

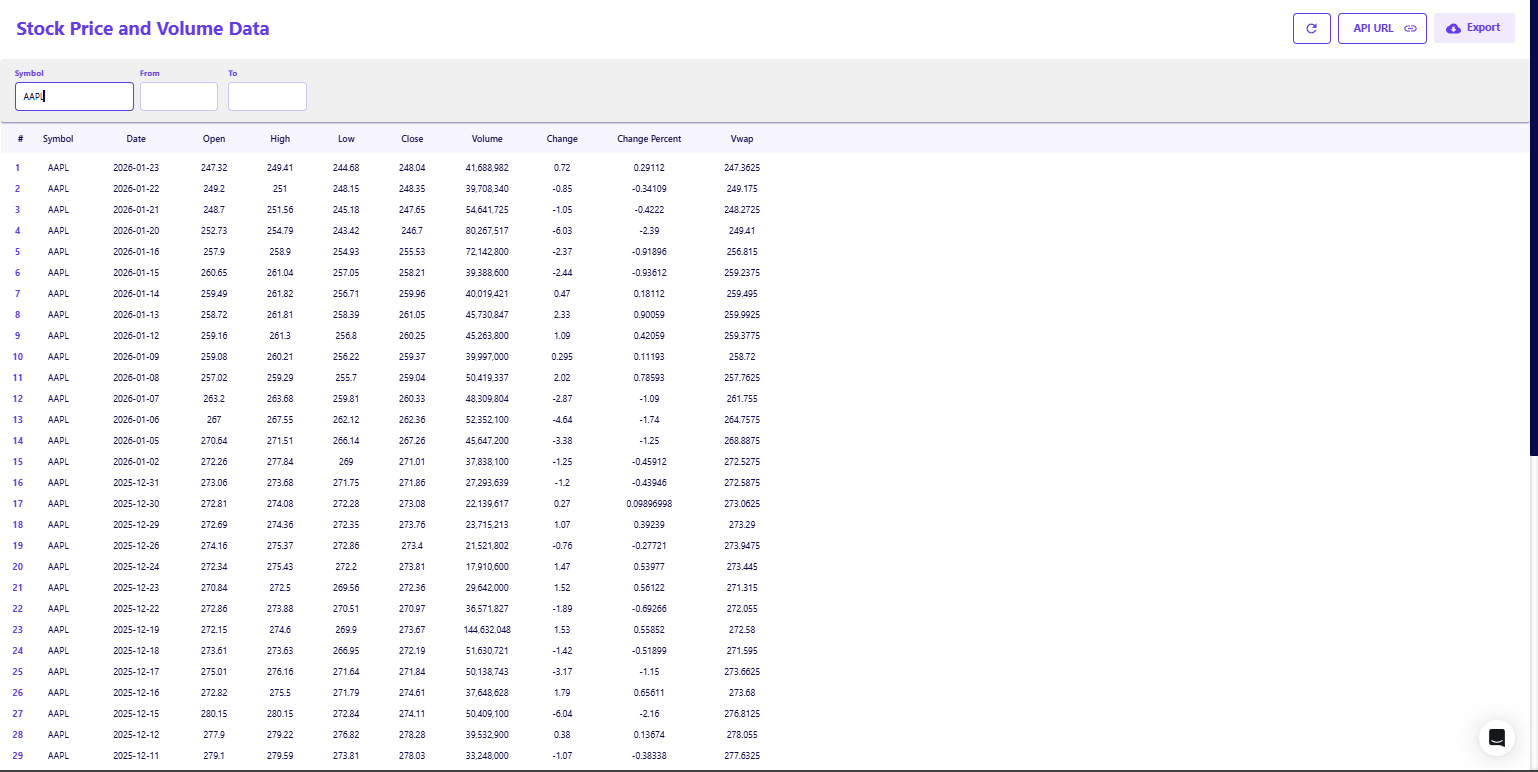

Step 3: The Historical Stress Test

Finally, we audited the historical market data for continuity. We targeted the Historical Daily Price Full endpoint to check for gaps or null values during active trading days.

The returned dataset showed a complete, unbroken sequence of trading days with consistent floating-point precision for Open, High, Low, and Close values.

By validating these three pillars Identity, Macro, and History in under fifteen minutes, we established a high-conviction baseline without writing a single line of production code.

The Exit Criteria

A responsible test drive must have a clear ending. You are not "playing around" with data; you are answering a binary question: Is this tool worth a production budget?

Defining Deal-Breakers

You should have a defined list of deal-breakers written down before you run your first query. If the tool hits a deal-breaker, you stop. This discipline prevents the "sunk cost" trap where you keep fixing a bad data feed just because you have already spent time on it.

The Scorecard Approach

Your scorecard should be simple and binary:

- Latency: Must be under X milliseconds for standard queries.

- Coverage: Must include 100% of the priority asset list.

- Documentation: Must resolve 90% of integration questions without support tickets.

The Strategic Value of "No"

The modern financial workflow demands tools that respect your autonomy. By conducting a disciplined, independent test drive of Financial Modeling Prep, you remove the guesswork from vendor selection.

You replace vague assurances with hard evidence gathered from your own code. This process does not just save time; it ensures that when you finally advocate for a tool, you do so with the unshakeable confidence of someone who has already seen it work. If the tool fails your test, you walk away with zero cost. If it passes, you implement it with certainty.

Frequently Asked Questions

What is the primary benefit of an autonomous data evaluation?

Autonomous evaluation allows you to verify technical compatibility and data quality before involving procurement or management. It removes the pressure of a formal sales cycle and lets the data speak for itself.

How much time should I allocate to a test drive?

A focused evaluation should take no more than a few days. If you are spending weeks cleaning data just to see if it works, the tool has likely already failed the test.

Why is it important to test "edge cases" first?

Commoditized data (like Apple's closing price) is easy to get right. Vendors differ in how they handle complex events like spin-offs, delistings, and ticker changes. Testing these first reveals the true quality of the infrastructure.

Do I need a full engineering team to test Financial Modeling Prep?

No. The API is designed to be accessible for individual analysts using Python, R, or even Excel. You can validate the core value proposition on your own before asking for engineering resources.

What is the most overlooked aspect of data API testing?

Documentation quality. If you cannot understand how to construct a query without emailing support, the "maintenance cost" of that data source will be incredibly high over the long term.

Can I evaluate the API using the free tier?

Yes, the free tier is sufficient for validating the data structure, response format, and integration logic. Once you confirm the "plumbing" works, you can upgrade to access deeper history or premium endpoints.

Common Reasons New Financial Modeling Prep Users Get Stuck and How to Avoid Them

The moment a developer or analyst receives an API key is usually the moment of highest momentum. The registration is com...

Understanding ETF Risk Through Holdings, Not Tickers

ETF risk analysis often starts and ends at the ticker level. Metrics such as historical volatility, beta, tracking error...